February 16, 2025

Robots.txt Generator

This post explains what robots.txt is and why we decided to build the Robots.txt Generator on Konigle.

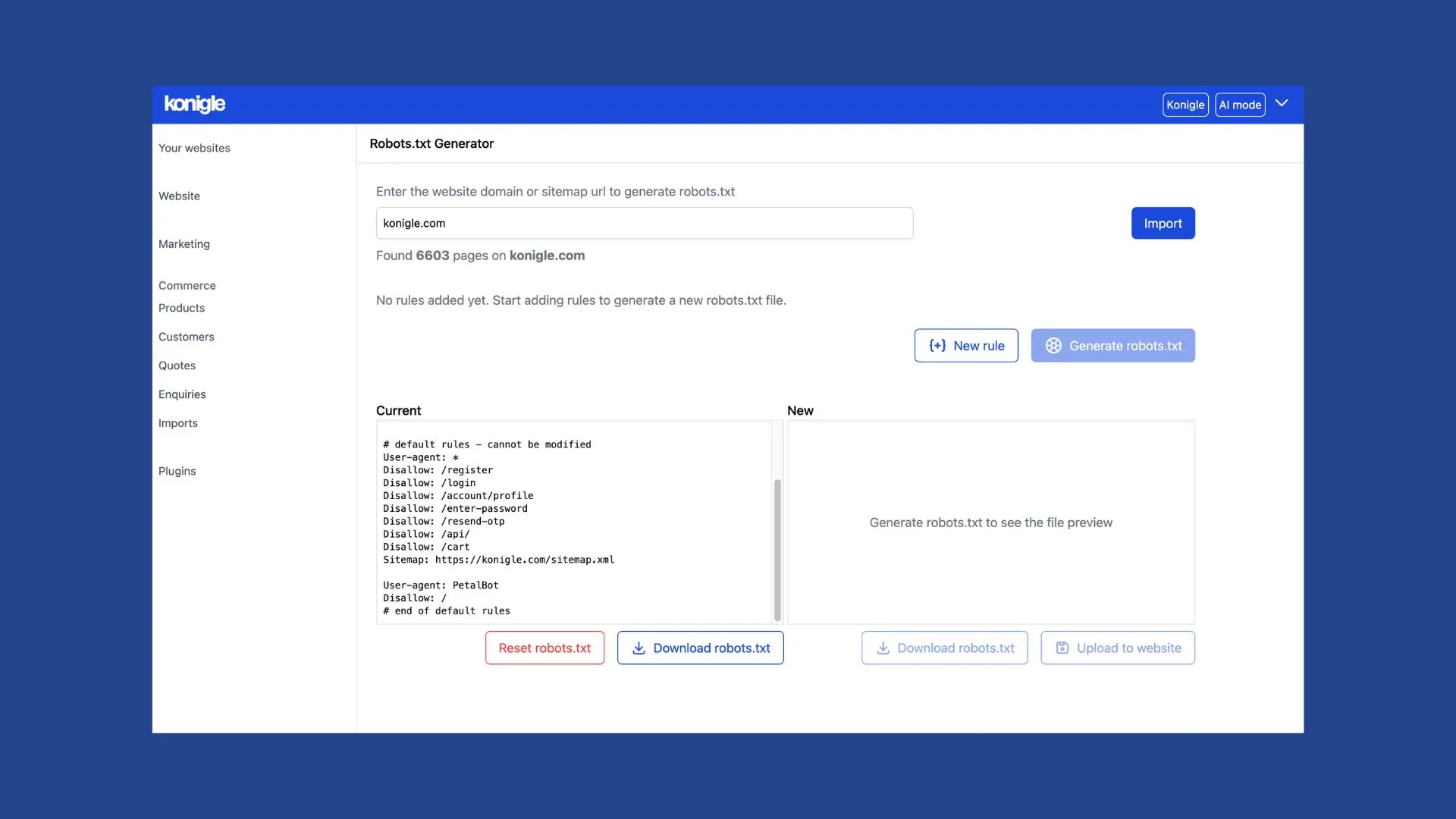

The Robots.txt Generator feature on Konigle makes creating a robots.txt file for your website easier and more user-friendly.

On the internet, most websites have a "silent gatekeeper." It's called robots.txt, a simple text file at the root of your site that tells search engine crawlers and AI bots which parts of your website they're allowed to crawl and which they should avoid.

While the concept is straightforward, its implications for your business are profound. A properly configured robots.txt file is a powerful tool for a busy SME owner, serving two critical functions that can give you a significant edge.

1. Directing Google's Attention for Better SEO

Just as you would guide a VIP through your office, you can guide Google's crawlers through your website. For larger sites, Google recommends using robots.txt to manage your "crawl budget." By blocking less important URLs (like login pages or internal-only pages), you keep crawlers from spending time on them, so Google can devote more of its limited crawl resources to the pages that matter most for your business. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still show up in search results if other pages link to it, and a better crawl does not guarantee a better ranking.
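
To make this concrete, here is a minimal sketch of what such rules could look like and how you might check them with Python's built-in `urllib.robotparser`. The paths `/login/` and `/internal/` and the domain `example.com` are placeholders chosen for illustration, not Konigle's actual defaults.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep every crawler out of low-value sections.
ROBOTS_TXT = """\
User-agent: *
Disallow: /login/
Disallow: /internal/
"""

def is_allowed(robots_txt, user_agent, url):
    """Return True if user_agent may crawl url under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

print(is_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/login/"))     # False
print(is_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/products/"))  # True
```

Python's parser uses simple prefix matching, so treat the result as a sanity check rather than an exact replica of how Google interprets the file.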

2. Protecting Your Content from AI Scrapers

A growing number of AI bots scrape content from websites to train machine learning models or generate new content. If you want to protect your intellectual property and keep these bots away from your content, robots.txt is the first line of defense. Reputable crawlers honor it, although compliance is voluntary, so it works as a clear instruction rather than a hard lock.

You just need to know the specific user agent of the bot, and you can block it from accessing your site. The challenge, of course, is that identifying and blocking these bots requires you to know the correct syntax and implement it.
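
As a rough illustration, the sketch below blocks one AI crawler by its user agent while leaving other crawlers alone. `GPTBot` is used as the example token; the `/login/` path and `example.com` are placeholders, and you should confirm the current user agent in each bot operator's documentation.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules: block one AI crawler everywhere,
# and keep all other crawlers out of /login/ only.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /login/
"""

def can_crawl(user_agent, url, robots_txt=ROBOTS_TXT):
    """Return True if user_agent may crawl url under robots_txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

for agent in ("GPTBot", "Googlebot"):
    print(agent, can_crawl(agent, "https://example.com/blog/"))
# GPTBot False
# Googlebot True
```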

The idea behind robots.txt is simple, but the implementation is anything but. The syntax can be finicky, and one small error can lead to big problems. This is where Tim, aka Konigle AI, steps in to solve the problem for you.
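
Here is one example of how small the error can be. In this sketch, the only difference between the two files is the missing `login/` after the slash, yet the second one tells every crawler to stay away from the whole site. The helper name `blocks_homepage` and the sample rules are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

def blocks_homepage(robots_txt, user_agent="Googlebot"):
    """Return True if the rules stop user_agent from crawling the homepage."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "https://example.com/")

INTENDED = "User-agent: *\nDisallow: /login/\n"  # block only the login area
TYPO = "User-agent: *\nDisallow: /\n"            # blocks the entire site

print(blocks_homepage(INTENDED))  # False
print(blocks_homepage(TYPO))      # True
```

A quick check like this before publishing a new file can catch the most damaging mistakes.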

We've built a native robots.txt generator and management tool, but your AI employee, Tim, makes it even easier. You don't have to navigate a complex plugin; you just have to ask via email.

- Default Protection is Handled: Every website built with Konigle comes with a default robots.txt file that automatically blocks common "spammy" bots and pages that search engines shouldn't crawl (like login or checkout pages). This foundational protection is already in place for you.

- Add or Remove Rules: To get started with adding new rules or editing your file, just drop an email to Tim. You can tell him what you want to block or allow, and he'll handle the technical work, ensuring the rules are implemented correctly and your website is protected.

With Konigle AI, you gain the power to manage your website's SEO and protect your content from scraping, all without needing a technical background.

Beyond robots.txt, remember that Tim can handle a full spectrum of digital tasks for your business: managing website content, optimizing SEO, running email marketing campaigns, and more.